1. Introduction

Welcome! This book serves as an all-in-one crash course in utilizing Metis.

Metis (commissioned in September 2023) is a 32-node CPU/GPU hybrid cluster running Red Hat Enterprise Linux 8.x operating system. 1PB of shared disk is provided by a Cray ClusterStor E1000 storage server. Each compute node is an HPE DL 385 Gen 10+ V2 server equipped with:

- 2x AMD EPYC 7713 CPUs 2.0 GHz 64-core processors

- 251-1259 GB RAM, 1 x 4TB SSD scratch disk drives

- 1 x NVIDIA A100 GPU, Amper™ architecture, 40 GB RAM each card

- All 32 nodes are connected via a 200 Gbps Infiniband network

To learn more about Metis, you can see the METIS layout and specification.

Primary Purpose

The goal of this book is to allow faculty at NIU to hit the ground running with their research.

We want you to focus on completing your work - not getting the hardware to work.

This book will teach you the skills to help focus on writing your application from the ground up.

Additionally, should a step confuse you, the final product of every project example in this book can also be found in this book's repository.

Explored Use Cases

There are six use cases covered here, with increasing levels of control over Metis:

- Chapter 2.1.1 - Running a C++ project

- No additional configuration

- PBS only

- Chapter 2.1.2 - Running a CUDA project

- Loading CUDA via the

modulecommand - PBS only

- Loading CUDA via the

- Chapter 4.1 - Running a language not installed on Metis, such as Python 3.11

- Downloading a pre-built Docker Image with

pythonversion 3.11 installed - PBS with Docker via Podman

- Downloading a pre-built Docker Image with

- Chapter 4.2 - Running packages not installed on Metis with GPU passthrough

- Downloading a pre-built Docker Image with the requirements for CUDA

- Passing through GPUs to Docker

- PBS with Docker and NVIDIA Container Toolkit via Podman

- Chapter 4.3 - Running virtually any project using custom Docker Images

- Writing, building, and publishing your own Docker Image

- Passing through GPUs to Docker

- PBS with Docker and NVIDIA Container Toolkit via Podman

- Chapter 5.1 - SSH Automation

- Demonstrates programmatic submission of PBS jobs via SSH for the purpose of fitting Metis into existing systems.

Where Should I Read to?

Cases Where Docker May Not Be Needed

If your application is either of the following, you shouldn't use Docker.

- Native C, Go, or Python applications with pre-installed or no dependencies

- OpenMPI-based applications

If it's one of those two, chapter 2 will be of great use!

The following chapters may not be as useful, as they touch primarily on Docker.

Cases Where Docker Is Needed

If your application is any of the following, it's highly recommended to use Docker:

- Applications which require a different operating system

- Applications that are not pre-installed and easier to setup using docker than natively (consult with crcdhelpdesk@niu.edu)

If you only need CPU-based computation, chapters 2-2.1 and 4.1 will teach you everything you need.

If you need GPU passthrough or have a very complicated project, it is recommended to read this book in its entirety!

1.1. Connecting to Metis

The Metis cluster is easily accessible via the SSH protocol.

Each operating system has various possible SSH clients - we will be using the OpenSSH client, as it is pre-installed on most operating systems and very straightforward.

Windows 10 and 11

As of 2024, both Windows 10 and Windows 11 have the OpenSSH client pre-installed. If you don't have it installed, update your operating system using the Windows Updater.

To connect, open either Windows PowerShell or Command Prompt, and run the following:

PS C:\...\> ssh you@metis.niu.edu

When prompted, enter your temporary password.

On your first login, you will be prompted to create a new password. Ensure that it's something memorable, but very secure!

Then, close your session:

[you@metis ~]$ exit

And re-login to test your new password:

PS C:\...\> ssh you@metis.niu.edu

Linux and MacOS

Most major distributions and the latest versions of MacOS have the OpenSSH client installed.

Run the following:

$ ssh you@metis.niu.edu

When prompted, enter your temporary password.

On your first login, you will be prompted to create a new password. Ensure that it's something memorable, but secure!

Then, close your session:

[you@metis ~]$ exit

And re-login to test your new password:

$ ssh you@metis.niu.edu

1.2. Remote Workspaces in VSCode

If you encounter issues, see the official VSCode documentation!

This guide is primarily applicable to Windows users, as the graphical integrated development enviroment it provides can make development much easier.

However, development on Metis is also possible and easy, as the majority of popular terminal-based editors are preinstalled on the login nodes:

Similarly, the two most popular build tools are also installed:

Installing VSCode and Remote Explorer

If you haven't already, first install Visual Studio Code. Note that Visual Studio Code and Visual Studio are not the same thing.

Next, navigate to the Extensions tab, and install the Remote Development extension by Microsoft. Then, restart VSCode.

Adding Metis as a Remote Host

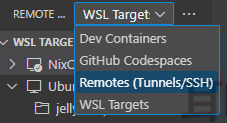

On the left panel, click on Remote Explorer:

![]()

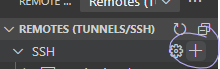

Then, make sure you are in the Remotes (Tunnel/SSH) section:

Next, create a new SSH host:

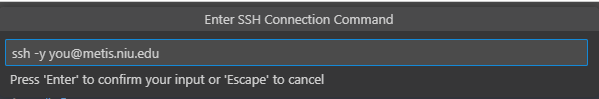

This will prompt you for your SSH command. It is slightly easier to add the -y flag, as seen below:

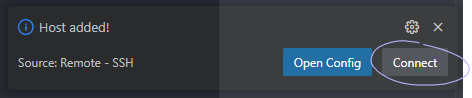

It will then as you where to save your SSH configuration. It doesn't really matter which you pick, but the option in your Users folder is ideal.

After choosing a location, you'll see a prompt in the bottom right, on which you should click Connect:

This will open a new VSCode window, where you are now connected to Metis graphically!

Adding a Folder

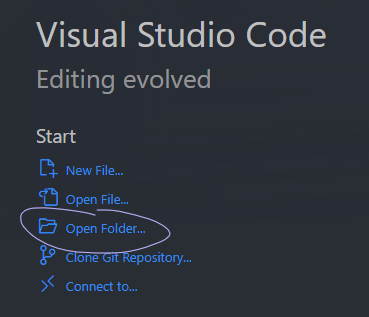

When this new window opens, you can click the Open Folder option to choose your first folder:

It's recommended you start with /home/you.

If you've been allocated a project, you may also want to open /lstr/sahara/your_project.

2. Basic Metis Usage

This first chapter will provide two into-the-fire projects that will teach you the core systems of Metis. This will be done through a simple C++ project, followed by an optimized version written with CUDA.

It's recommended to have a minimal knowledge of C++, CUDA, and Linux / Bash. If you haven't used any of these three before, or if it's been a while, linked below are some introductory resources:

These next two chapters lay the foundational skills needed to use the advanced techniques in the following chapters, and it is highly recommended that you read them before proceeding!

Overview of the Chapters

Chapter 2.1 - C/C++ on Metis

A general overview of how to get started with HPC distributed computing tactics on Metis in C++/C/CUDA.

Chapter 2.2 - Rust on Metis

A advanced look at the various types of hardware acceleration available through the Rust Programming Language.

2.1. C/C++ on Metis

Chapter 2.1.1: Building a C++ Project from the Ground Up

- Goals: Familiarize with basic commands and job submission on Metis.

- C++ Boilerplate: Create and run a basic "Hello, World" C++ program with computational loops.

- PBS Basics: Write a PBS job script to run your C++ program on compute nodes.

- Execution: Compile and run the C++ program locally and via PBS.

- Outcome: You will be able to understand job submission, the PBS script structure, and basic module commands.

Chapter 2.1.2: Building a CUDA Project from the Ground Up

- Goals: Learn to use CUDA for GPU programming on Metis.

- CUDA Boilerplate: Write a CUDA program to achieve the same task as in Chapter 1.1 but using GPU acceleration.

- CUDA Modules: Install and use the CUDA compiler (nvcc) with module commands.

- Execution: Compile and run your CUDA program, observing performance improvements.

- PBS for CUDA: Adapt the PBS script to load CUDA modules and compile with nvcc.

- Outcome: You will be able to leverage CUDA for faster computation and understand the structure of both CUDA programs and PBS scripts.

2.1.1. Building a C++ Project from the Ground Up

Associated CRCD Documentation: Project Example and PBS

You can find the code mentioned in this chapter in this book's repository!

This introductory project will teach you the absolute minimal nessecary information to create a basic C++ project on the Metis supercomputer.

Before we tackle more robust and complex technologies such as CUDA or OpenMPI, our goal is to familiarize ourselves with Metis before abstracting and building upon our understanding.

We'll instead opt to start with the most basic of programs - "Hello, World" (with, of course, a computationally intensive task) - to get started!

Goals

- Get a feel for the

modulecommands - Get a feel for the PBS Professional job submission system

- Understand the layout of a

.pbsjob script file - Get a feel for the

qsubcommand

C++ Boilerplate

First, let's start by creating a folder for our projects, then a folder for C++, and finally a folder for this project:

$ mkdir /lstr/sahara/<your_project>/<you>

$ mkdir /lstr/sahara/<your_project>/<you>/cpp

$ mkdir /lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis

$ cd /lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis

Let's start by creating a main.cpp file with the following contents:

#include <iostream>

int main () {

// Say hello to the user

std::cout << "Hello, Metis!" << std::endl;

// Initialize our counter variables

unsigned long long int counter = 0;

unsigned long long int number_of_divisible_by_two = 0;

unsigned long long int number_of_divisible_by_three = 0;

unsigned long long int number_of_divisible_by_five = 0;

// First, iterate through a 3D grid to get to our block

for ( int grid_z = 0; grid_z < 1000; grid_z++ ) {

for ( int grid_y = 0; grid_y < 100; grid_y++ ) {

for ( int grid_x = 0; grid_x < 100; grid_x++ ) {

// Second, iterate through the 3D block

for ( int block_z = 0; block_z < 10; block_z++ ) {

for ( int block_y = 0; block_y < 10; block_y++ ) {

for ( int block_x = 0; block_x < 10; block_x++ ) {

counter += 1;

if ( counter % 2 == 0 )

number_of_divisible_by_two += 1;

if ( counter % 3 == 0 )

number_of_divisible_by_three += 1;

if ( counter % 5 == 0 )

number_of_divisible_by_five += 1;

}

}

}

}

}

}

// Provide our results to the user

std::cout << std::endl

<< "- Numbers divisible by two: " << number_of_divisible_by_two << std::endl

<< "- Numbers divisible by three: " << number_of_divisible_by_three << std::endl

<< "- Numbers divisible by five: " << number_of_divisible_by_five << std::endl;

return 0;

}

This program does two things - it says hello to the user, and then takes count of the numbers divisible by 2, 3, and 5 from 0 up to 10 billion.

This is done with multiple nested loops - the reason for which will be explained, and the code optimized, in the following chapter on CUDA.

For now, what's apparent and important is that this is a computationally intensive task!

Next, let's build and run this code. By default, Metis users have GCC and G++ (version 11.3.0) preinstalled, which we will now use:

$ g++ -o hello_world main.cpp

$ ./hello_world

The calculation should take 23 seconds, after which we should see our results! However, it will run faster on the compute nodes, and you can allocate more resources. It will run exponentially faster when employing CUDA or OpenMPI, which we will touch on in the next chapter!

Getting Started with PBS

We are not currently making full use of Metis with this current setup. What we just ran our code on is called the login node, which has nowhere near the amount of computational power that is available to the compute nodes, which are where computationally intensive or time-consuming programs should be run.

But how do we do so?

Metis has many users, and each user may have various types of programs, each program with varying hardware requirements. As such, Metis uses a resource manager and job scheduling system by Altair, called PBS Professional.

In order to make use of this program, we must describe to the system what we need from it, which could be things such as:

- CPU cores

- CPU count

- RAM size

- GPU chunks

- Estimated runtime

...and more.

To do so, we use a PBS script file - a bash script with embedded PBS directives.

Let's get started by creating a run.pbs file with the following contents:

#!/bin/bash

#PBS -N hello_world

#PBS -j oe

#Note - on Metis

# Nchunks<=32, for GPU chunks

# Nchunks<=4096/Ncpus for CPU-only chunks

# (run 'shownodes' command to find the number of free cpus)

# Ncpus<=128, the total number of CPUs per node is 128

# NPmpi<=Ncpus, the total number of CPUs allocated for MPI tasks,

# request NPmpi=Ncpus for non-OPENMP jobs

# Ngpus==1, the total number of GPUs per node is 1

# X<=256, 28 of 32 Metis modes have 256 GB of RAM

# special jobs can request up to 1024 GB of RAM (4 nodes)

#

# Below, we request two chunks;

# each chunk needs 8 CPUs, 1 MPI processes, 1 GPU card, and 2 GB RAM

#PBS -l select=1:ncpus=8:mpiprocs=1:ngpus=1:mem=251gb

#PBS -l walltime=00:15:00

# When to send a status email ("-m abe" sends e-mails at job abort, begin, and end)

#--PBS -m ae

#--#PBS -M account@niu.edu

# Navigate to our working directory

PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis

echo "The job's working directory is $PROJECT_DIRECTORY"

cd $PROJECT_DIRECTORY

# Install GCC

echo ""

echo "Loading GCC..."

module purge; module load gcc/gcc-12.3.0

module list

echo "Done!"

# Compile our code

echo ""

echo "Compiling code..."

g++ main.cpp -o hello_world

echo "Done!"

# Run our binary

echo ""

echo "Executing binary..."

./hello_world

echo "Done!"

# Clean up our binary

rm ./hello_world

Before we move on, let's dissect what this does.

1. #!/bin/bash

2.

3. #PBS -N hello_world

4. #PBS -j oe

5.

6. #Note - on Metis

7. # Nchunks<=32, for GPU chunks

8. # Nchunks<=4096/Ncpus for CPU-only chunks

9. # (run 'shownodes' command to find the number of free cpus)

10. # Ncpus<=128, the total number of CPUs per node is 128

11. # NPmpi<=Ncpus, the total number of CPUs allocated for MPI tasks,

12. # request NPmpi=Ncpus for non-OPENMP jobs

13. # Ngpus==1, the total number of GPUs per node is 1

14. # X<=256, 28 of 32 Metis modes have 256 GB of RAM

15. # special jobs can request up to 1024 GB of RAM (4 nodes)

16. #

17. # Below, we request two chunks;

18. # each chunk needs 8 CPUs, 1 MPI processes, 1 GPU card, and 2 GB RAM

19. #PBS -l select=1:ncpus=8:mpiprocs=1:ngpus=1:mem=251gb

20. #PBS -l walltime=00:15:00

21.

22. # When to send a status email ("-m abe" sends e-mails at job abort, begin, and end)

23. #--PBS -m ae

24. #--#PBS -M account@niu.edu

...

Lines starting with #PBS are not comments, rather, they are PBS-specific commands!

The following lines are important to understand:

-

Line 1 is a shebang which specifies that the file's commands are to be interpreted by bash.

-

Line 3 specifies the name of our file.

-

Line 19 specifies the hardware requirements for our job

To learn more about specifying hardware requirements, see Chapter 6.4.1.

-

Line 20 specifies the estimated runtime of our job

-

Lines 23 and 24 specify options for recieveing emails regarding various events

Adding a sends mail on abort. Adding b sends mail on start. Adding e sends mail on end.

To learn more about recieving emails, see Chapter 6.4.1.

-

#PBS -j <n | oe>orqsub -j <n | oe>Specifies whether the standard error stream should be merged with the standard output stream.

Specifying

oemeans that bothstderrandstdoutwill be in the same output file.Specifying

n, or not specifying at all, means they will be in different files.$ qsub -j n run.pbs 20000.cm $ ls hello_world.o20000 hello_world.e20000 -

#PBS -m <n | a*b*e*>orqsub -m <n | a*b*e*>Specifies when mail about your job should be sent, with the following key:

- To send mail when it aborts, add

a - To send main when it begins, add

b - To send main when it ends, add 'e'

- To not send mail, specify

nor do not use this directive.

- To send mail when it aborts, add

For this job, none of this needs to be modified. The next section, however, will need to be:

...

26. # Navigate to our working directory

27. PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis

28. echo "The job's working directory is $PROJECT_DIRECTORY"

29. cd $PROJECT_DIRECTORY

...

Be sure to replace any instances on line 27 of <your_project> and <you> with your Metis project and username!

The reason for this only becomes relevant if you have interest in creating non-C++ projects or automating your job submission, so it is worth noting that you can replace /lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis with $PBS_O_WORKDIR if you would like. This will be populated with where the job is run from.

Next, we will familiarize ourselves with the module commands, which are used on lines 31-36:

...

31. # Install GCC

32. echo ""

33. echo "Loading GCC..."

34. module purge; module load gcc/gcc-12.3.0

35. module list

36. echo "Done!"

...

The module commands are somewhat akin to a package manager, allowing you to load packages ("modulefiles") into your environment.

Unlike you, the compute node does not have gcc pre-installed. So to make it available to the compute node, we must install it, done in the following fashion:

- Line 34 clears all packages with

module purge, then installs GCC withmodule load gcc/gcc-12.3.0. - Line 35 lets you see what's currently installed with

module list.

This process for installing a package is the same on both the login and compute nodes. To see what packages are available to you, you can run module av. To narrow your search by a specific key word, use module av <keyword>.

...

38. # Compile our code

39. echo ""

40. echo "Compiling code..."

41. g++ main.cpp -o hello_world

42. echo "Done!"

43.

44. # Run our binary

45. echo ""

46. echo "Executing binary..."

47. ./hello_world

48. echo "Done!"

49.

50. # Clean up our binary

51. rm ./hello_world

...

The remaining lines are what you are accustomed to, they use the same build command from before, then run the binary, and finally clean up any artifacts.

Launching a Job with PBS

We're ready to go! All that's left is to start our job, which can be done easily with the following command:

$ qsub run.pbs

The output will look something like this:

18681.cm

This tells us the ID number of our job. Wait around 30 seconds for the job to finish, and list the contents of the directory!

$ ls

hello_world.o18681 main.cpp run.pbs

Reading the output from our job:

$ cat hello_world.o18681

The job's working directory is /lstr/sahara/<your_project>/<you>/cpp/cpp_on_metis

Loading GCC...

Currently Loaded Modulefiles:

1) gcc/gcc-12.3.0

Done!

Compiling code...

Done!

Executing binary...

Hello, Metis!

- Numbers divisible by two: 5000000000

- Numbers divisible by three: 3333333333

- Numbers divisible by five: 2000000000

Done!

Closing Thoughts

Congratulations! You've successfully launched your first job on the Metis supercomputer.

This is an impressive achievement. Those who are satisfied with the performance of their programs and are comfortable with only using the C family may even be able to stop here.

However, Metis is capable of much, much more.

In the next chapter, we will discuss utilizing CUDA to weaponize the power of graphics card programming to drastically reduce the computation times of our programs, as well as learning more about the module and PBS-related commands.

2.1.2. Building a CUDA Project from the Ground Up

Associated CRCD Documentation: Project Example and PBS

You can find the code mentioned in this chapter in this book's repository!

The next part of this introductory chapter will teach you how to build, compile, and run a CUDA program from the ground up on Metis.

CUDA stands for Compute Unified Device Architecture, and it is proprietary NVIDIA-distributed software that allows developers to perform matrice-based operations at unbelievable speeds using the heavily optimized CUDA cores found only on NVIDIA GPUs.

This chapter will teach you how to run CUDA code on Metis, but it will not teach you how to write it!

There are many fantastic resources on how to write it, some of which are linked below:

- (NVIDIA) An Even Easier Introduction to CUDA

- (cuda-tutorial) Introduction to CUDA

- (NVIDIA) CUDA Runtime API Reference

- (NVIDIA) CUDA Driver API Reference

Goals

- Learn how to use the

modulecommands on the login node - Learn how to use the

qstatcommand to view a running or completed job

CUDA Boilerplate

If you did not in the previous section, start by creating a folder for our projects, then a folder for CUDA projects, and finally a folder for this project:

$ mkdir /lstr/sahara/<your_project>/<you>

$ mkdir /lstr/sahara/<your_project>/<you>/cuda

$ mkdir /lstr/sahara/<your_project>/<you>/cuda/cuda_on_metis

$ cd /lstr/sahara/<your_project>/<you>/cuda/cuda_on_metis

Let's start by creating a main.cu file with the following contents:

#include <iostream>

/// A kernel function designed to calculate the number of

/// numbers divisible by two, three, and five

///

/// # Arguments

/// * `d_number_of_divisible_by_two` - The number of numbers divisible by two

/// * `d_number_of_divisible_by_three` - The number of numbers divisible by three

/// * `d_number_of_divisible_by_five` - The number of numbers divisible by five

__global__ void calculate(

unsigned long long int * d_number_of_divisible_by_two,

unsigned long long int * d_number_of_divisible_by_three,

unsigned long long int * d_number_of_divisible_by_five

) {

int grid_x = blockIdx.x;

int grid_y = blockIdx.y;

int grid_z = blockIdx.z;

int block_x = threadIdx.x;

int block_y = threadIdx.y;

int block_z = threadIdx.z;

unsigned long long local_counter =

(grid_z * 100 * 100 * 10 * 10 * 10) +

(grid_y * 100 * 10 * 10) +

(grid_x * 10 * 10) +

(block_z * 10 * 10) +

(block_y * 10) +

block_x + 1;

unsigned long one = 1;

if (local_counter % 2 == 0) {

atomicAdd(d_number_of_divisible_by_two, one);

}

if (local_counter % 3 == 0) {

atomicAdd(d_number_of_divisible_by_three, one);

}

if (local_counter % 5 == 0) {

atomicAdd(d_number_of_divisible_by_five, one);

}

}

int main() {

// Say hello to the user

std::cout << "Hello, Metis!" << std::endl;

// Host variables

unsigned long long int h_number_of_divisible_by_two = 0;

unsigned long long int h_number_of_divisible_by_three = 0;

unsigned long long int h_number_of_divisible_by_five = 0;

// Device variables

unsigned long long int * d_number_of_divisible_by_two;

unsigned long long int * d_number_of_divisible_by_three;

unsigned long long int * d_number_of_divisible_by_five;

// Allocate memory on the device with the correct sizing

cudaMalloc( &d_number_of_divisible_by_two, sizeof(unsigned long long int) );

cudaMalloc( &d_number_of_divisible_by_three, sizeof(unsigned long long int) );

cudaMalloc( &d_number_of_divisible_by_five, sizeof(unsigned long long int) );

// Copy the memory from the host to the device

cudaMemcpy( d_number_of_divisible_by_two, &h_number_of_divisible_by_two,

sizeof(unsigned long long int), cudaMemcpyHostToDevice );

cudaMemcpy( d_number_of_divisible_by_three, &h_number_of_divisible_by_three,

sizeof(unsigned long long int), cudaMemcpyHostToDevice );

cudaMemcpy( d_number_of_divisible_by_five, &h_number_of_divisible_by_five,

sizeof(unsigned long long int), cudaMemcpyHostToDevice );

// Define our grid's dimensions

dim3 gridDim(100, 100, 10);

// Define each block's dimensions

dim3 blockDim(10, 10, 10);

// Run our calculation

calculate<<<gridDim, blockDim>>>(d_number_of_divisible_by_two, d_number_of_divisible_by_three, d_number_of_divisible_by_five);

cudaDeviceSynchronize();

// Copy the memory back to our machine

cudaMemcpy(&h_number_of_divisible_by_two, d_number_of_divisible_by_two, sizeof(unsigned long long int), cudaMemcpyDeviceToHost);

cudaMemcpy(&h_number_of_divisible_by_three, d_number_of_divisible_by_three, sizeof(unsigned long long int), cudaMemcpyDeviceToHost);

cudaMemcpy(&h_number_of_divisible_by_five, d_number_of_divisible_by_five, sizeof(unsigned long long int), cudaMemcpyDeviceToHost);

// Provide our results to the user

std::cout << std::endl

<< "- Numbers divisible by two: " << h_number_of_divisible_by_two << std::endl

<< "- Numbers divisible by three: " << h_number_of_divisible_by_three << std::endl

<< "- Numbers divisible by five: " << h_number_of_divisible_by_five << std::endl;

// Free the memory

cudaFree(d_number_of_divisible_by_two);

cudaFree(d_number_of_divisible_by_three);

cudaFree(d_number_of_divisible_by_five);

return 0;

}

This program does the exact same thing as the previous section, with one key difference - it makes use of the CUDA runtime.

Instead of using indicied loops, we run our program using the compute systems of CUDA.

- Our outer loop's dimensions are replaced by the CUDA (thread) block grid, 1-3D grid containing (thread) block.

- Our inner loop's dimensions are replaced by the CUDA thread block, which are a 1-3D block containing the threads our kernel function will be executed on.

In our program, we use the maximum number of dimensions, effectively creating a 6D matrix. Because each each block is aware of its coordinates on the grid it lies on, and each thread the coordinates of the block it sits in, we can use sneaky math to calculate which number the old "counter" variable each of the ten billion threads translates to.

If you would like to learn more about CUDA, the resources in the introductory section of this paragraph are greatly recommended.

Loading Modules on the Login Node

However, unlike our previous project which used g++, the CUDA compiler, nvcc, is not pre-loaded.

To load it, we will use the module commands mentioned briefly in the previous section. The module system loads and unloads an evironment for specific application packages not part of the operating system.

First, let's list the modules related to cuda with the following command:

$ module av cuda

-------------------------------------------------- /etc/modulefiles --------------------------------------------------

cuda/cuda-7.5 cuda/cuda-8.0 cuda/cuda-11.5 cuda/cuda-11.8 cuda/cuda-11.8-rocky8 cuda/cuda-12.2

We see a variety of versions. For the sake of this guide, we will be using cuda/cuda-11.8.

Next, let's clean up our modules, and load CUDA:

$ module purge

$ module load cuda/cuda-11.8

$ module list

Currently Loaded Modulefiles:

1) cuda/cuda-11.8

Finally, we're ready to go! Let's compile and run our program:

$ nvcc -o hello_world main.cu

$ ./hello_world

Hello, Metis!

- Numbers divisible by two: 50000000

- Numbers divisible by three: 33333333

- Numbers divisible by five: 20000000

You will notice a nearly instantaneous completion time of 0.5 seconds.

Such is the power of graphical programming!

Launching a CUDA Program with PBS

For the most part, the run.pbs file will look similar to the version from the previous chapter.

Create a run.pbs file with the following contents:

#!/bin/bash

#PBS -N hello_world_cuda

#PBS -j oe

#Note - on Metis

# Nchunks<=32, for GPU chunks

# Nchunks<=4096/Ncpus for CPU-only chunks

# (run 'shownodes' command to find the number of free cpus)

# Ncpus<=128, the total number of CPUs per node is 128

# NPmpi<=Ncpus, the total number of CPUs allocated for MPI tasks,

# request NPmpi=Ncpus for non-OPENMP jobs

# Ngpus==1, the total number of GPUs per node is 1

# X<=256, 28 of 32 Metis modes have 256 GB of RAM

# special jobs can request up to 1024 GB of RAM (4 nodes)

#

# Below, we request two chunks;

# each chunk needs 8 CPUs, 8 MPI processes, 1 GPU card, and 16 GB RAM

#PBS -l select=1:ncpus=8:mpiprocs=1:ngpus=1:mem=251gb

#PBS -l walltime=00:15:00

# When to send a status email ("-m abe" sends e-mails at job abort, begin, and end)

#--PBS -m ae

#--#PBS -M account@niu.edu

# Navigate to our working directory

PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/cuda/cuda_on_metis

echo "The job's working directory is $PROJECT_DIRECTORY"

cd $PROJECT_DIRECTORY

# Install GCC

echo ""

echo "Loading CUDA"

module purge; module load cuda/cuda-11.8; module load gcc/gcc-11.3.0

module list

echo "Done!"

# Compile our code

echo ""

echo "Compiling code..."

nvcc -o hello_world main.cu

echo "Done!"

# Run our binary

echo ""

echo "Executing binary..."

./hello_world

echo "Done!"

# Clean up our binary

rm ./hello_world

There are a few notable differences.

- Our project name is

hello_world_cudainstead ofhello_world. - Our project directory is

.../hello_world_cudainstead of.../hello_world. - Instead of loading GCC, we loaded CUDA (

module load cuda/cuda-11.8). - Instead of compiling with G++ (

g++ -o hello_world main.cpp), we compiled with CUDA (nvcc -o hello_world main.cu).

Be sure to replace any instances of <your_project> and <you> with your Metis project and username!

Launching our Job with PBS

We're ready to go! All that's left is to start our job, which can be done easily with the following command:

$ qsub run.pbs

The output will look something like this:

18681.cm

This tells us the ID number of our job. Wait around 30 seconds for the job to finish, and list the contents of the directory!

$ ls

hello_world_cuda.o18681 main.cu run.pbs

Reading the output from our job:

$ cat hello_world.o18681

The job's working directory is /home/<your_account_username>/projects/cuda/cuda_on_metis

Loading GCC...

Currently Loaded Modulefiles:

1) gcc/gcc-12.3.0

Done!

Compiling code...

Done!

Executing binary...

Hello, Metis!

- Numbers divisible by two: 5000000000

- Numbers divisible by three: 3333333333

- Numbers divisible by five: 2000000000

Done!

It's also worth noting that you can use the qstat command to view the status of a job:

$ qstat -x 18681

Job id Name User Time Use S Queue

---------------- ---------------- ---------------- -------- - -----

18681.cm hello_world z1994244 00:00:02 F short

The -x flag means you will recieve output even if the job has concluded.

The documentation for this command, as well as qsub, can be found below:

There are also other useful commands such as qdel (terminates a job):

Closing Thoughts

Once again, congratulations! You have just harnessed the power of the NVIDIA hardware on Metis.

The boilerplate from this project will be enough to get almost any CUDA project up and running. For those who recieve enough of a performance improvement to satisfy your needs, you may be able to stop here.

For tasks that require even further optimization, Metis supports OpenMPI, a message passing interface which allows for massively parallel computation across multiple CPUs/Metis nodes.

Metis has modules containing GCC, CUDA, and OpenMPI for your convenience:

$ module av openmpi

-------------------- /etc/modulefiles ---------------------

openmpi/openmpi-1.8.8-gcc-11.4.0

openmpi/openmpi-4.0.7-gcc-9.5.0-cuda-11.8

openmpi/openmpi-4.1.1-gcc-11.3.0-cuda-11.8

openmpi/openmpi-4.1.5-gcc-8.5.0-cuda-11.8

openmpi/openmpi-4.1.5-gcc-11.4.0-cuda-11.8

openmpi/openmpi-4.1.5-gcc-12.3.0-cuda-12.2

openmpi/openmpi-4.1.6-gcc-11.4.0-cuda-11.8

Using a combination of both OpenMPI for coordinating large-scale tasks across many processors and CUDA for handling tasks best accelerated by GPU programming will allow you to fully harness the hardware of Metis.

2.2. Rust on Metis

Rust is a highly unique language that has C-level performance without C-esque memory issues.

No segfaults, no race conditions, no risk of another Therac-25.

Rust has multiple features positioning it as an ideal language for HPC:

- Rich ecosystem: Libaries for CUDA, parallelism, and distributed computing

- Memory safety: No leaking memory, no unexpected crashes at the end of your workload

- Blistering performance: With compiler optimizations, Rust can outperform C

- Revealing type system: Understand your data and its output before and after it's processed

Chapter 2.2.1: A Parallel Rust Project, from the Ground Up

This chapter will teach you:

- The basics of Rust, tailored for HPC

- How to compile and execute Rust code with Cargo

- How to launch a Rust program with PBS

- Trivially writing parallel code with the

rayoncrate

Chapter 2.2.2: Cross-Node Computation with MPI and Rust

This chapter will teach you:

- How to prepare your environment for MPI development

- The basics of MPI with openMPI as a backend

- How to use the

mpicrate in Rust for cross-node computation and communication

2.2.1. A Parallel Rust Project, from the Ground Up

You can find the code mentioned in this chapter in this book's repository!

Rust is a massive language with many intricate types that can be used to facilitate the representation of almost anything imagineable.

However, we'll be focusing on only a few, namely due to limitations imposed by the MPI standard - we can't trivially pass data that isn't supported by their API.

These types are laid out in the documentation for the mpi crate here, but to summarize:

- Signed Integers:

i8,i16,i32,i64,isize - Unsigned Integers:

u8,u16,u32,u64,usize - Floating-Point Numbers:

f32,f64 - Booleans:

bool

Don't overthink it - you probably want f64 or i32/i64 in nearly every case. Use usize for indexing. If you come from C++, you can find a nice guide here that shows what's what!

I'll run you through the basics, but I won't keep you long - there are plenty of great guides that do a better job teaching it than I ever could.

First, variables are declared with the following syntax:

let [mut] var: <type> = <expression>;

Variables are immutable by default. Here's an example where we create a (mutable) vector of f64s:

#![allow(unused)] fn main() { let mut nums: Vec<f64> = Vec::new(); }

Rust also has arguably the best macro support out of any language, one such example being the vec!(<num>; <len>) macro that fills an array out:

#![allow(unused)] fn main() { let mut nums: Vec<f64> = vec!(0.0f64; 100_000_000); }

Rereading above, you can see that the declaration expects an expression. Almost everything is an expression, including block expressions, meaning the following is completely valid Rust code:

#![allow(unused)] fn main() { let mut nums: Vec<f64> = { let mut inner_nums = Vec::new(); for _ in 0..100_000_000 { inner_nums.push(0); } inner_nums }; }

...where _ is a wildcard "forget-about-it" operator, since we don't care about the index here.

Notice the implied return at the end of the block - we could equivalently write return inner_nums;, but it's more "Rust-y" to do it this way!

Getting Started

Let's get started with an example. Metis comes with cargo installed, which is the everything-bagel for development in Rust.

First, create a new project:

$ mkdir -p ~/projects/rust/basic-rayon

$ cd ~/projects/rust/basic-rayon

$ cargo init .

$ cargo run

You'll be met with a kind Hello, World!. Nice work, you're basically a Rust developer now.

All Rust source code is in the src directory, with the entrypoint being main.rs. Let's rewrite this to start strong with a program that generates a whole bunch of random floats!

Creating a Vec of Random Numbers

First, we'll need the rand crate (a Rust library) to make random numbers. We'll also go ahead and add the rayon crate for later:

$ cargo add rand

$ cargo add rayon

Let's now write our main function!

use rand::prelude::*; fn main() { let mut nums: Vec<f64> = vec!(0.0; 1_000_000); rand::rng().fill(&mut nums[..]); println!("{nums:?}"); }

Run with cargo run, and you'll be met with a slew of output. Nice work! We'll sorta black box what exactly is happening with rand, but to summarize:

use rand::prelude::*; // Import `rand` fn main() { let mut nums: Vec<f64> = vec!(0.0; 1_000_000); // Make a zero vector rand::rng().fill(&mut nums[..]); // Fill it with random floats println!("{nums:?}"); }

Notice that we're using the println! macro - we know it's a macro because of the bang (!) - to format the nums vector into its debugging representation with :?.

This is required because unlike a primitive type like f64, there's no standard string representation of a vector:

[0, 1, 2, 3] // like this?

0, 1, 2, 3 // maybe this?

0 1 2 3 // what about this?

Sequentially Calculating the Average

Well, alright, we've gone ahead and created our vector of random f64s. How do we calculate the average?

Functions in Rust use the following syntax:

fn <name> ( [arg1: <type>], [arg2: <type>], ... ) [-> <type>] {

...

}

Arguments are optional, but must have specified types. The return type for a function is assumed to be (), an empty tuple.

This can somewhat be thought of as void from C++, but it's not the same - () is a Zero-Sized Type that occupies absolutely no memory. If that sounds insane, I thought so too, but it's legit - the docs on it are pretty interesting.

Jumping back to function syntax, here's a rudimentary example of an add function:

#![allow(unused)] fn main() { fn add ( num_1: f64, num_2: f64 ) -> f64 { num_1 + num_2 } }

...notice again the implicit return, Rust's neat like that!

So to write our average function, we're going to take advantage of Rust's absolutely phenomenal Iterator type, which has an outrageous number of useful methods. We'll write a function which uses two for our average:

#![allow(unused)] fn main() { fn sequential_average ( nums: &[f64] ) -> f64 { nums.into_iter().sum::<f64>() / (nums.len() as f64) } }

Let's break this down.

- 1.)

&[f64]is a reference to an array off64s - 2.)

.into_iter()converts our array reference into anIterator<f64> - 3.)

.sum()summates ourIterator<f64>into onef64 - 4.)

.len()returns ausize, which we can cast to an f64

And this works! If we append it to our main function (consider removing the println!(...)):

fn main ( ) { ... // Test the sequential average let start = Instant::now(); let avg = sequential_average(&nums); let duration = start.elapsed(); println!("Average: {} in {:?}", avg, duration); ... }

When testing with a much larger number, it's actually pretty quick, it gets the job done for 100,000,000 elements in about ~900ms on Metis!

Very quick - Rust is fast - but we can be faster. Let's talk about rayon.

Parallel Computation with rayon

To get started with rayon, let's import its prelude and something to help us measure:

#![allow(unused)] fn main() { use rayon::prelude::*; use std::time::Instant; }

Now, what's unique about rayon in our case is the ParallelSlice that it offers. It allows you to effectively convert our regular slice into a slice that can be easily operated on in parallel.

I'm gonna throw this at you all at once, but I'll break it down after, I promise!

#![allow(unused)] fn main() { fn parallel_average ( nums: &[f64] ) -> f64 { let sum: f64 = nums .par_chunks(nums.len() / 100) .map(|chunk| { chunk.into_iter() .map(|&n| n) .sum::<f64>() }) .sum(); sum / (nums.len() as f64) } }

Our function has the same inputs and outputs as its sequential equivalent. Similarly, we want to start by creating an iterator.

Instead of using .into_iter(), which creates an iterator of elements); we'll use .par_chunks(<n>), which creates an iterator of chunks which are n length big.

Next, we use .map(<closure>), which takes a closure that should accept a chunk and return its sum. For the sake of brevity, assume that a closure is a lambda function from C++, they're pretty similar here.

Just like the previous sequential implementation, we create an iterator and summate it. The sole difference is that since .par_chunks creates chunks that are &[&f64] instead of &[f64], we dereference them to their actual values with .map(|&n| n).

Lastly, we divide the sum by the length to create our average. Let's add testing code to our main function:

fn main ( ) { ... // Test the sequential average let start = Instant::now(); let avg = sequential_average(&nums); let duration = start.elapsed(); println!("Average: {} in {:?}", avg, duration); ... }

All together, our program should come out as the following:

use rand::prelude::*; use rayon::prelude::*; use std::time::Instant; fn main() { // Create a vector of n random integers let mut nums: Vec<f64> = vec!(0.0; 100_000_000); rand::rng().fill(&mut nums[..]); // Test the parallel average let start = Instant::now(); let avg = parallel_average(&nums); let duration = start.elapsed(); println!("Average: {} in {:?}", avg, duration); // Test the sequential average let start = Instant::now(); let avg = sequential_average(&nums); let duration = start.elapsed(); println!("Average: {} in {:?}", avg, duration); } fn sequential_average ( nums: &[f64] ) -> f64 { nums.into_iter().sum::<f64>() / nums.len() as f64 } fn parallel_average ( nums: &[f64] ) -> f64 { let sum: f64 = nums .par_chunks(nums.len() / 100) .map(|chunk| { chunk.into_iter() .map(|&n| n) .sum::<f64>() }) .sum(); sum / (nums.len() as f64) }

Lastly, let's compile and not in the debug profile, as cargo run does, but in release mode:

$ cargo build --release

Compiling basic-rayon v0.1.0 (/nfs/ihfs/home_metis/z1994244/projects/rust/basic-rayon)

Finished `release` profile [optimized] target(s) in 0.34s

$ ./target/release/basic-rayon

Average: 0.5000479638601427 in 21.319388ms

Average: 0.5000479638602693 in 126.604707ms

Nicely done! And wow - sure makes a difference, doesn't it?

2.2.2. Cross-Node Computation with MPI and Rust

You can find the code mentioned in this chapter in this book's repository!

What is OpenMPI, and How Does it Differ from OpenMP?

I'll start by loosely contrasting the functionality of the two:

- OpenMP is for shared memory across multiple processors/threads

- OpenMPI is for distributed memory across multiple systems

OpenMP is something that's semi-comparable to our previous approach to multithreading. It's a lot beefier, but the author of this documentation generally discourages the use of it - recommending to instead use either language parallelism features (for simplicity) or OpenMPI (for drastically improved performance).

How OpenMPI Works

MPI is a protocol for a (M)essage-(P)assing (I)nterface.

There's four concepts core to MPI:

- Universe - The collection of all nodes. On Metis, this is abstracted away by PBS Professional, so don't think too hard about it.

- World - The collection of all MPI processes and their intercommunication layers. You can think of this as the "meeting room", where each process has a headset and microphone to talk to oneanother.

- Size - The number of MPI processes in the World.

- Rank - The index representing this MPI process in the World.

When you launch an MPI program, all programs with the same binary and memory. In fact, if you didn't use the rank indicator anywhere in the program, they'd be identical in most cases, simply duplicating output size times.

Here's an example in Rust that does just that:

use mpi::traits::*; use anyhow::{Context, Result}; fn main ( ) -> Result<()> { let universe = mpi::initialize() .context("Failed to initialize MPI.")?; let world = universe.world(); let size: i32 = world.size(); println!("Size: {size}"); Ok(()) }

To get this to run, you'll need to do the following:

$ mkdir -p ~/projects/rust/basic-mpi

$ cd ~/projects/rust/basic-mpi

$ cargo init .

$ cargo add anyhow

$ cargo add mpi

$ module purge

$ module load openmpi/openmpi-5.0.7-gcc-14.2.0-cuda-12.8

$ cargo run

For an MPI run with size 3, you'd get:

Size: 3

Size: 3

Size: 3

Neat, but why run the same thing in multiple places?

Rank-Based Inter-process Logic and Communication

Things start to get interesting quick when you consider the rank:

use mpi::traits::*; use anyhow::{Context, Result}; fn main ( ) -> Result<()> { let universe = mpi::initialize() .context("Failed to initialize MPI.")?; let world = universe.world(); let size: i32 = world.size(); let rank: i32 = world.rank(); println!("Size: {size} - Rank: {rank}"); Ok(()) }

Running this, we get a different result:

Size: 3 - Rank: 0

Size: 3 - Rank: 1

Size: 3 - Rank: 2

If you've been paying close attention, it probably just clicked why this is big - you can diverge in logic based on this rank.

Here's a full example for this logic at work:

use mpi::traits::*; use rand::prelude::*; use anyhow::{Context, Result}; const NUM_ELEMENTS: usize = 1_000_000; fn calculate_random_avg ( n: usize ) -> f64 { let mut arr = vec!(0.0; n); rand::rng().fill(&mut arr[..]); arr.into_iter().sum::<f64>() / (n as f64) } fn main ( ) -> Result<()> { let universe = mpi::initialize() .context("Failed to initialize MPI.")?; let world = universe.world(); let size: i32 = world.size(); let rank: i32 = world.rank(); let local_elements = NUM_ELEMENTS / (size as usize) + (rank == 0) .then_some(NUM_ELEMENTS % (size as usize)) .unwrap_or(0); let local_avg = calculate_random_avg(local_elements); world.process_at_rank(0).send(&local_avg); if rank == 0 { let global_avg = (0..size) .fold(0f64, |acc, _| { acc + world.any_process().receive::<f64>().0 }) / (size as f64); println!("Global average of {global_avg} over {NUM_ELEMENTS} elements"); println!("Computed on {size} MPI processes"); } Ok(()) }

Lots of things just got added, so let's break it down.

- Main can fail. That's why it returns a

Result<()>, and also why theuniversehas a.context(...)?snippet - that wraps the error with additional information and early returns if it indeed contains an error. - Each and every node calculates the average of

(NUM_ELEMENT / size)elements, and then sends their result to the node with rank 0. - The node with rank 0, and only that node, receives the results from each node (including itself) and prints the result.

- Since our

mainfunction returns aResult<()>, we need to finish the function by returning theResult::Ok<()>variant, which can be shortened toOk<()>since we declared we'd be returning anResultin the function definition

Building and Executing a Rust MPI binary

Now, the above steps still work - but what's the point of running this on one process?

Let's get started with a basic 2-process PBS batchfile:

#!/bin/bash

#PBS -N basic-mpi

#PBS -l select=2:ncpus=1:mpiprocs=1

#PBS -l walltime=00:10:00

#PBS -j oe

#PBS -o basic-mpi.out

# Change to the directory from which the job was submitted

cd $PBS_O_WORKDIR

# Load MPI module (adjust to your cluster's modules)

echo ""

echo "[ Loading Modules ]"

module purge

module load openmpi/openmpi-5.0.7-gcc-14.2.0-cuda-12.8

# Path to your compiled Rust binary

echo ""

echo "[ Building Program ]"

cargo build --release

BIN=./target/release/basic-mpi

# Run with 2 processes (1 per node)

echo ""

echo "[ Starting Program ]"

mpirun -np 2 -hostfile $PBS_NODEFILE $BIN

Let's note two things. Firstly, we aren't using cargo run here, we're actually building a binary with the release profile. This is important because not only is a binary required by mpirun, but a binary built this way is significantly better optimized.

Secondly, we must coordinate that we're asking for 2 MPI processes in two places:

- The PBS directive (

select=2) mpirunwith (-np 2)

Final Results

Testing this, we get a successful output:

$ qsub run.pbs

69937.cm

$ cat basic-mpi.out

[ Loading Modules ]

Loading openmpi/openmpi-4.1.8-gcc-11.4.0-cuda-11.8

Loading requirement: gcc/gcc-11.4.0 cuda/cuda-11.8

[ Building Program ]

Compiling basic-mpi v0.1.0 (/nfs/ihfs/home_metis/z1994244/projects/rust/basic-mpi)

Finished `release` profile [optimized] target(s) in 0.42s

[ Starting Program ]

Global average of 0.4995294844859745 over 1000000 elements

Computed on 2 MPI processes

With this, we've successfully run a multi-node Rust program with distributed memory!

This approach is hyper-modern - you're getting the low-level performance of C and OpenMPI with the safety and opinionated predictability of Rust.

Very interesting stuff - Rust uniquely positions itself as a potential competitor in the HPC space with the aforementioned benefits.

3. User Environment Customization/Virtualization on Metis

Podman + Docker vs Singularity/Apptainer + Docker vs Conda vs Modulefiles

Metis provides several technologies to encapsulate environments and manage software dependencies. Each of these tools—Podman, Docker, Singularity/Apptainer, Conda, and Modulefiles—has different strengths and weaknesses, depending on your use case.

Any technology marked with a "⭐" is a strong candidate in its given feature.

Comparison Chart

| Feature | Podman + Docker | Singularity/Apptainer | Conda | Modulefiles |

|---|---|---|---|---|

| Use Case | ⭐ General development, CI/CD, image availability | ⭐ HPC environments, scientific computing | Managing isolated software environments | Dynamic loading of software on HPC clusters |

| Image/Package Repository | ⭐ Docker Hub (extremely large and varied) | Smaller ecosystem, focus on reproducibility | Anaconda repository (⭐ only if using Python or R) | Pre-installed software for the HPC cluster |

| Security | Rootless operation (Podman) | ⭐ High security in multi-user environments | No OS-level isolation | ⭐ Tied to user permissions on HPC |

| Integration with HPC | Limited, requires config | Optimized for HPC environments | Limited, not designed for HPC | Native integration with HPC systems |

| Ease of Use in HPC | Very easy, but requires some minimal configuration | Tedious but straightforward to work with | Simple for general use (but not HPC-optimized) | ⭐ Extremely easy to use, but requires cluster-specific knowledge |

3.1 Pros/Cons: Podman + Docker and Singularity/Apptainer + Docker

To learn more about Podman, see the official Podman Documentation, or a great beginner's tutorial!

To learn more about Apptainer, see the official Apptainer Documentation, or a comprehensive tutorial!

Metis has both Podman and Singularity installed. Both are software designed to allow non-root users to run containers on systems like Metis, where this is the case.

Podman is designed to effectively be a drop-in replacement for Docker on non-root systems, while Singularity is a tool specifically designed for HPC environments with performance, reproducibility, and security in mind.

Although this documentation does not cover Singularity, it is a very powerful tool that should be considered if you are looking to squeeze the maximum performance out of Metis.

Podman + Docker

Podman and Docker are containerization platforms that allow users to run and manage containers in isolated environments.

Pros:

- Large Ecosystem: Docker has an extensive library of images on Docker Hub.

- Rootless Operation (Podman): Podman is rootless by default, improving security without compromising functionality.

- Versatile: Ideal for general development, CI/CD pipelines, and application isolation.

Cons:

- Limited HPC Integration: Requires extra configuration to integrate with HPC systems, especially regarding MPI.

- Overhead: Containerization can add overhead compared to native execution, especially for complex MPI-based workflows.

Singularity/Apptainer

Singularity, now rebranded as Apptainer, is a containerization technology specifically designed for HPC environments. Apptainer allows users to encapsulate applications and their dependencies in containers that are highly portable and optimized for performance in multi-user systems.

Pros:

- Native OpenMPI Support: Singularity/Apptainer handles MPI seamlessly, outperforming Docker in HPC scenarios.

- Reproducibility: Ensures consistent performance and results, vital in scientific computing.

- Security: Designed for multi-user environments, ensuring other users can't interfere with your containers.

Cons:

- Smaller Ecosystem: Fewer available container images and less community support compared to Docker.

- Less Flexibility: Its focus on reproducibility can make certain application deployments more challenging.

- Complex Local Setup: Initial setup on local machines can be tricky, especially compared to Docker/Podman.

3.2. Pros/Cons: Conda

To learn more about Conda, see CRCD's documentation!

Conda is a popular package and environment management tool, widely used in scientific computing for managing Python and R environments. It allows users to install multiple versions of software and switch between them without affecting the system’s main environment.

Pros:

- Package Management: Supports a wide array of libraries, including Python, R, and C/C++ packages.

- Cross-platform: Works on most operating systems and is widely adopted in data science and machine learning communities.

- Virtual Environment Management: Allows easy creation of isolated virtual environments.

Cons:

- Not Containerized: Unlike Docker and Singularity, Conda environments are not isolated at the OS level, leading to potential conflicts with system libraries.

- Not HPC-optimized: While it works in HPC environments, it's not specifically designed for them. It lacks the strong security and performance optimizations of container-based solutions.

- Heavy on Disk: Conda environments can become quite large, consuming significant disk space.

3.3. Pros/Cons: Modulefiles

To learn more about Modulefiles or to find links to CRCD's documentation, see Chapter 6.3!

Environment Modules (or Modulefiles) are a system for dynamically modifying user environments via modulefiles. They are heavily used in HPC environments to load software environments on-demand without needing root privileges.

Pros:

- Lightweight: No overhead from containerization.

- HPC Optimized: Designed specifically for HPC environments, often with pre-built software optimized for the specific cluster. Easily the most optimized technology.

- Flexible: Allows for loading different software versions, easy to use and understand in HPC.

Cons:

- Not Portable: Modules are often tightly coupled to the cluster’s software stack, making them difficult to reproduce elsewhere.

- Manual Management: Requires explicit loading/unloading, making it less automated compared to container technologies.

- Complex and Administrator Dependent: You may need administrator intervention to add or modify system modules.

Using Podman + Docker on Metis

In this chapter, we will dive deep into Docker and PBS techniques, expanding on the foundational knowledge covered in Chapters 2.1 and 2.2.

We'll explore how to combine these techniques to create a robust workflow for your projects on Metis, including handling custom Docker images, leveraging GPU acceleration, and managing complex dependencies.

Overview of the Chapters

Chapter 4.1: Using Pre-Made Docker Images

- Goals: Understand the limitations of Metis modulefiles and learn how to circumvent them using Docker.

- Problem: Some software isn't available on Metis, creating modulefiles can be time-consuming or impossible due to custom dependencies

- Solution: Use Docker (via Podman) to run custom applications.

- Outcome: You will be able to run custom software avoiding the limitations of the Metis environment

Chapter 4.2: Using GPU Acceleration with Docker

- Goals: Learn how to enable GPU passthrough in Docker containers on Metis.

- Problem: GPUs are not accessible in Docker by default, and additional steps are required to set up NVIDIA drivers and CUDA.

- Solution: Configure Podman with specific flags and use NVIDIA's CUDA-enabled Docker images.

- Outcome: You will be able to leverage GPU acceleration for your Dockerized applications, significantly boosting performance.

Chapter 4.3: Creating Your Own Docker Base Images

- Goals: Gain the skills to create custom Docker images tailored to your project’s needs.

- Problem: Pre-made Docker images may not always meet the specific requirements of your project.

- Solution: Learn the basics of writing Dockerfiles, building custom images, and publishing them to public repositories.

- Outcome: You will be able to create, customize, and share Docker images, enabling a flexible and reproducible environment for your work.

4.1. Using Pre-Made Docker Images

Associated CRCD Documentation: PBS

You can find the code mentioned in this chapter in this book's repository!

We will first begin by using a language which is not among the modules which Metis provides, Python 3.11.

In actuality, Metis does offer Python, as seen below:

$ module av python

-------------------- /etc/modulefiles ---------------------

python/python-3.9.10 python/python-3.12.4

...but, at the time of writing this, it does not have Python 3.11, which is among the most commonly used versions.

So, how do we fix this?

Well, we ourselves can't fix the global modulefiles on Metis, which means under normal circumstances, we would have to reach out to Metis staff to have the module fixed - something that takes away from both your own time and the time of the Metis staff.

You are able to define your own modulefiles, but this is a time consuming task, and it can't solve everything.

Goals

- Learn how to use Podman and Docker

- Learn how to install dependencies via Podman's CLI

- Learn how to use Podman in a PBS script file

- Learn how to kill Podman to avoid uptime emails and alerts

The Problem

Modulefiles struggle or are outright impossible to create with any of the following cases:

- Packages which can only run on certain operating systems, and specific versions of those operating systems

- Packages which have dense dependency trees

- Packages which have circular dependencies

- Packages which need elevated permissions

- Packages with long build times, where a distributed binary may be preferred

- Closed-source or unfree packages (which are very common in machine learning!)

- Huge numbers of dependencies

This isn't to say it's impossible to manually build every single dependency for your project, and also include them manually.

However, this is an extremely time-consuming process, and time spent doing this will only take away from your core work.

Dependency installation should be a matter of lines, not weeks.

The Solution

Docker, an extremely powerful containerization and encapsulation tool that allows developers to define virtual machines with a level of granularity rarely found in modern computing. Docker allows you to select an operation system as a base, install packages and libraries, and define run behaviour.

We will be using an overlay on Docker called Podman. It allows us to use Docker containers despite not having elevated permissions on Metis. Understanding of Podman isn't required - all Docker commands can have docker replaced with podman (or in our case, /bin/podman).

If you haven't already, create your projects directory, a new directory for Docker projects, and finally a directory for this project:

$ mkdir /lstr/sahara/<your_project>/<you>

$ mkdir /lstr/sahara/<your_project>/<you>/docker

$ mkdir /lstr/sahara/<your_project>/<you>/docker/premade_image

$ cd /lstr/sahara/<your_project>/<you>/docker/premade_image

Next, let's create a main.py file with the following contents:

print( "Hello, Metis!" )

Now, how do we get Docker to run this file?

For your own projects, you can search the Docker Hub for programming languages, software, and more. You can also use a base image like ubuntu:22.04 or debian:bookworm, which contain nothing but the operating system with no additional packages or programming languages.

From there, you can use the exec command to install the languages or packages with that operating system's respective package manager. We will go over the usage of the exec command with examples shortly!

We'll start by downloading and running a Docker Image, which will be built on the Debian operating system version 12.6 "Bookworm", and include Python 3.11.9.

Note: If you see something like ERRO[0000] cannot find UID/GID for user z1994244: no subuid ranges found for user "z1994244" in /etc/subuid - check rootless mode in man pages., it's okay! This error sometimes occurs the first time you run a command, and if it does, simply wait a few seconds and run it again.

Downloading and starting our container:

$ /bin/podman run \

-v ./.:/home \

-w /home \

--name python_container \

-t -d \

python:3.12.5-bookworm

WARN[0000] Network file system detected as backing store. Enforcing overlay option `force_mask="700"`. Add it to storage.conf to silence this warning

f258979e09d0923ebb815b0b0baae9ae9cb2de18ace02a4aa282920c673073d9

The first line with the warning can be safely ignored. It's likely that by the time you are reading this, it's been silenced.

Next, let's run our Python script!

$ /bin/podman exec python_container python3 main.py

...

Hello, World!

Congratulations! You've just run a version of Python that's not installed on Metis at all. But, what if our Python script needed some dependencies?

Overwrite the main.py file with the following contents:

import numpy as np

print( "Hello, Metis!" )

If we try to run our script again, we get an error:

$ /bin/podman exec python_container python3 main.py

...

Traceback (most recent call last):

File "/home/main.py", line 1, in <module>

import numpy as np

ModuleNotFoundError: No module named 'numpy'

Let's create our Python virtual environment, and install numpy using the exec command! Run the following:

$ /bin/podman exec python_container python -m venv .venv

$ /bin/podman exec python_container .venv/bin/pip install numpy

Running our script again:

$ /bin/podman exec python_container .venv/bin/python3 main.py

...

Hello, Metis!

Nicely done! Lastly, let's kill and remove our container:

$ /bin/podman kill python_container

$ /bin/podman rm python_container

Again, congratulations! You've successfully downloaded a Docker Image, installed some dependancies, and run them on the login node!

Docker in PBS

Now, we just ran that Docker image on the login node, not the compute nodes. So how do we write a PBS file to automate what we just did?

Create a run.pbs file with the following contents:

#!/bin/bash

#PBS -N premade_image

#PBS -j oe

#Note - on Metis

# Nchunks<=32, for GPU chunks

# Nchunks<=4096/Ncpus for CPU-only chunks

# (run 'shownodes' command to find the number of free cpus)

# Ncpus<=128, the total number of CPUs per node is 128

# NPmpi<=Ncpus, the total number of CPUs allocated for MPI tasks,

# request NPmpi=Ncpus for non-OPENMP jobs

# Ngpus==1, the total number of GPUs per node is 1

# X<=256, 28 of 32 Metis modes have 256 GB of RAM

# special jobs can request up to 1024 GB of RAM (4 nodes)

#

# Below, we request two chunks;

# each chunk needs 8 CPUs, 8 MPI processes, 1 GPU card, and 16 GB RAM

#PBS -l select=1:ncpus=8:mpiprocs=1:ngpus=1:mem=251gb

#PBS -l walltime=00:15:00

# When to send a status email ("-m abe" sends e-mails at job abort, begin, and end)

#--PBS -m ae

#--#PBS -M account@niu.edu

PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/docker/premade_image

echo "This job's working directory is $PROJECT_DIRECTORY"

cd $PROJECT_DIRECTORY

# Enable linger for the user

echo ""

echo "Enabling linger for the user..."

loginctl enable-linger <your_account_name>

echo "Done!"

# Start the container

#

# There are five flags, most of which will never change:

# - `-v $PROJECT_DIRECTORY:/home` mounts the project directory to the `/home`

# directory in the container.

# - `-w /home` sets the working directory in the container to `/home`.

# - `-t` allocates a pseudo-TTY. This is useful for running the container in

# the background.

# - `-d` runs the container in the background.

#

# The last argument is the image name. This is the only thing that will change

# between projects, this is the name of the image we want to run.

#

# For instance, in this case, we are running the `python:3.12.5-bookworm` image:

# - `python` is the name of the image.

# - `3.12.5-bookworm` is the tag of the image, which specifies the version of the

# image we want to run.

#

# Millions of pre-built images are available on Docker Hub, and will likely

# already have an image that suits your needs! You can search for images here:

# https://hub.docker.com/

#

# Note: There may be many logs that are printed to the console when the container

# is started. Despite being error-level, this is normal, and you can ignore them.

echo ""

echo "Starting the container..."

/bin/podman run \

-v $PROJECT_DIRECTORY:/home \

-w /home \

--name python_container \

-t -d \

python:3.12.5-bookworm \

> /dev/null 2>&1 # You can remove this line if you want to see the logs!

echo "Done!"

# Run our python script

#

# The `exec` command runs a command in a running container. In this case, we are

# running the `python3 main.py` command in the `python_container` container.

#

# There is a generic error message, which can be ignored.

echo ""

echo "Running the python script..."

/bin/podman exec python_container .venv/bin/python3 main.py

echo "Done!"

# Kill the container

#

# The `kill` command stops a running container. In this case, we are stopping the

# `python_container` container.

echo ""

echo "Stopping the container..."

/bin/podman kill python_container \

> /dev/null 2>&1 # You can remove this line if you want to see the logs!

echo "Done!"

# Remove the container

#

# The `rm` command removes a container. In this case, we are removing the

# `python_container` container.

echo ""

echo "Removing the container..."

/bin/podman rm python_container \

> /dev/null 2>&1 # You can remove this line if you want to see the logs!

echo "Done!"

This is largly the same, and only two things need to be modified to fit your Metis account:

...

PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/docker/premade_image

echo "This job's working directory is $PROJECT_DIRECTORY"

cd $PROJECT_DIRECTORY

# Enable linger for the user

echo ""

echo "Enabling linger for the user..."

loginctl enable-linger <your_account_name>

echo "Done!"

...

Be sure to replace <your_account_name>, <your_project>, and <you> instances with your own information! The linger command is unique to Podman (Docker) jobs in PBS, and ensures it has the nessecary permissions to run your jobs.

With that, let's test our job!

$ qsub run.pbs

18712.cm

$ cat premade_image.o18712

This job's working directory is /lstr/sahara/<your_project>/<you>/docker/premade_image

Enabling linger for the user...

Done!

Starting the container...

Done!

Running the python script...

time="2024-08-16T14:57:08-05:00" level=warning msg="Network file system detected as backing store. Enforcing overlay option `force_mask=\"700\"`. Add it to storage.conf to silence this warning"

Error: can only create exec sessions on running containers: container state improper

Done!

Stopping the container...

Done!

Removing the container...

Done!

Lastly, we must kill off our Podman processes on the login node, or else we'll recieve emails about extended uptime.

There are many, so it's easier to kill instead everything under your username. This will close your shell connection, so please save any unfinished work before doing so.

This will cause additional load times next time you login to Metis (10-20 seconds), but is important to do.

pkill -U <your_account_username>

Closing Thoughts

Congratulations! You now have the skills needed to tackle most CPU-only applications.

You can modify the base image to fit the operating system, languages, and software you need! You can also add or modify exec commands to install more languages, libraries, or software to be able to load anything else your software might need.

If you'd like to learn more about the run, exec, kill, or rm commands, additional documentation can be found in the Conclusion and Helpful Resources chapter!

If your application does not make use of the GPU, and you have no interest in automation or integration, you likely don't need to read any further. If you do, then feel free to continue onto Chapter 3.2 - Using GPU Acceleration with Docker!

4.2. Using GPU Acceleration With Docker

Associated CRCD Documentation: PBS

You can find the code mentioned in this chapter in this book's repository!

Now we must address how to use GPU passthrough on Metis with Podman (Docker), which can quickly elevate our programs to higher performance with the power of GPU acceleration!

Goals

- Pass through a GPU to Podman

The Problem(s)

In order to do so, we must solve the following problems:

- 1 - Our GPUs are not passed through to Podman (Docker) by default

- 2 - NVIDIA drivers and the CUDA runtime are not installed on most Docker Images

- 3 - NVIDIA device files aren't always loaded on the compute node

The Solution(s)

1 - Our GPUs are not passed through to Podman (Docker) by default

To solve this, we add two flags to our /bin/podman command:

$ /bin/podman run ... \

--device nvidia.com/gpu=all \

--security-opt=label=disable \

some/image

This will ensure that the GPU is passed through to our Docker Container.

2 - NVIDIA drivers and the CUDA runtime are not installed on most Docker Images

CUDA drivers are notoriously difficult to install, so it's highly recommended to use a base image that already has them pre-installed.

For the purpose of this example, we will be using NVIDIA's base image, which has CUDA pre-installed.

3 - NVIDIA device files aren't always loaded on the compute node

Occasionally, the /dev files for the NVIDIA GPUs disappear on compute nodes.

To solve this, we use a relatively hacky but functional solution - running a CUDA-based binary to force them to load.

For the sake of demonstration, we'll use the binary we developed in Chapter 2.2!

Implementation

First, let's create our project directory as we have in previous projects:

$ mkdir /lstr/sahara/<your_project>/<you>

$ mkdir /lstr/sahara/<your_project>/<you>/docker

$ mkdir /lstr/sahara/<your_project>/<you>/docker/premade_image_gpu

$ cd /lstr/sahara/<your_project>/<you>/docker/premade_image_gpu

Next, we need a binary that forces CUDA to load. We'll build the project from Chapter 2.1 and have it output here:

$ module purge

$ module load cuda/cuda-11.8

$ nvcc -o initialize_cuda /lstr/sahara/<your_project>/<you>/cuda/cuda_on_metis/main.cu

Finally, we'll implement everything mentioned above.

Create a run.pbs file with the following contents:

#!/bin/bash

#PBS -N premade_image_gpu

#PBS -j oe

#Note - on Metis

# Nchunks<=32, for GPU chunks

# Nchunks<=4096/Ncpus for CPU-only chunks

# (run 'shownodes' command to find the number of free cpus)

# Ncpus<=128, the total number of CPUs per node is 128

# NPmpi<=Ncpus, the total number of CPUs allocated for MPI tasks,

# request NPmpi=Ncpus for non-OPENMP jobs

# Ngpus==1, the total number of GPUs per node is 1

# X<=256, 28 of 32 Metis modes have 256 GB of RAM

# special jobs can request up to 1024 GB of RAM (4 nodes)

#

# Below, we request two chunks;

# each chunk needs 8 CPUs, 8 MPI processes, 1 GPU card, and 16 GB RAM

#PBS -l select=1:ncpus=8:mpiprocs=1:ngpus=1:mem=251gb

#PBS -l walltime=00:15:00

# When to send a status email ("-m abe" sends e-mails at job abort, begin, and end)

#--PBS -m ae

#--#PBS -M account@niu.edu

PROJECT_DIRECTORY=/lstr/sahara/<your_project>/<you>/docker/premade_image_gpu

echo "This job's working directory is $PROJECT_DIRECTORY"

cd $PROJECT_DIRECTORY

# Enable linger for the user

echo ""

echo "Enabling linger for the user..."

loginctl enable-linger <your_account_username>

echo "Done!"

# Initialize GPU device files by running our script with CUDA

echo ""

echo "Running a quick CUDA program..."

module purge; module load cuda/cuda-11.8

./initialize_cuda \

> /dev/null 2>&1 # You can remove this line if you want to see the logs!

echo "Done!"

# Start the container

#

# There are five flags, most of which will never change:

# - `-v $PROJECT_DIRECTORY:/home` mounts the project directory to the `/home`

# directory in the container.

# - `-w /home` sets the working directory in the container to `/home`.

# - `-t` allocates a pseudo-TTY. This is useful for running the container in

# the background.

# - `-d` runs the container in the background.

#

# The last argument is the image name. This is the only thing that will change

# between projects, this is the name of the image we want to run.

#

# For instance, in this case, `cuda:12.6.0-cudnn-runtime-ubuntu22.04`:

# - `cuda` is the name of the image.

# - `12.6.0-cudnn-runtime-ubuntu22.04` is the tag of the image, which specifies

# the version of the image, the base operating system, and any additional

# software that is included in the image.

#

# Millions of pre-built images are available on Docker Hub, and will likely

# already have an image that suits your needs! You can search for images here:

# https://hub.docker.com/

#

# Note: There may be many logs that are printed to the console when the container

# is started. Despite being error-level, this is normal, and you can ignore them.

echo ""

echo "Starting the container..."

/bin/podman run \

-v $PROJECT_DIRECTORY:/home \

-w /home \

--name cuda_container \

--device nvidia.com/gpu=all \

--security-opt=label=disable \

-t -d \

nvidia/cuda:12.6.0-cudnn-devel-ubuntu20.04 \

#> /dev/null 2>&1 # You can remove this line if you want to see the logs!

echo "Done!"

# Run our `nvidia-smi` command

#

# The `exec` command runs a command in a running container. In this case, we are

# running the `nvidia-smi` command in the `cuda_container` container.

#

# There is a generic error message, which can be ignored.

echo ""

echo "Running the \`nvidia-smi\` command..."

/bin/podman exec cuda_container nvidia-smi

echo "Done!"

# Kill the container

#

# The `kill` command stops a running container. In this case, we are stopping the

# `cuda_container` container.

echo ""

echo "Stopping the container..."

/bin/podman kill cuda_container \